Sequence homology searching¶

In this chapter we’ll talk about using pairwise alignment to search databases of biological sequences with the goal of identifying sequence homology. We previously defined homology between a pair of sequences to mean that those sequences are derived from a common ancestral sequence. Homology searching is an essential part of making inferences about where a biological sequence came from, and/or what it does. In most cases, if you have an unannotated biological sequence, such as the following protein sequence, it’s very hard (really, impossible) to know what it is without more information.

What a researcher will often do is search this sequence, their query, against some reference database of annotated sequences to learn what function the sequence performs (if the reference database contains functional annotation of sequences) and/or what organisms are likely to encode this sequence in their genome (if the reference database contains taxonomic annotation of sequences).

Exercise

Whose genome is the following sequence encoded in? What is its function? Take a minute now to answer these questions using the Protein BLAST homology search tool on the NCBI website.

>mystery-sequence1

MFVFLVLLPLVSSQCVNLTTRTQLPPAYTNSFTRGVYYPDKVFRSSVLHSTQDLFLPFFS

NVTWFHAIHVSGTNGTKRFDNPVLPFNDGVYFASTEKSNIIRGWIFGTTLDSKTQSLLIV

NNATNVVIKVCEFQFCNDPFLGVYYHKNNKSWMESEFRVYSSANNCTFEYVSQPFLMDLE

GKQGNFKNLREFVFKNIDGYFKIYSKHTPINLVRDLPQGFSALEPLVDLPIGINITRFQT

LLALHRSYLTPGDSSSGWTAGAAAYYVGYLQPRTFLLKYNENGTITDAVDCALDPLSETK

CTLKSFTVEKGIYQTSNFRVQPTESIVRFPNITNLCPFGEVFNATRFASVYAWNRKRISN

CVADYSVLYNSASFSTFKCYGVSPTKLNDLCFTNVYADSFVIRGDEVRQIAPGQTGKIAD

YNYKLPDDFTGCVIAWNSNNLDSKVGGNYNYLYRLFRKSNLKPFERDISTEIYQAGSTPC

NGVEGFNCYFPLQSYGFQPTNGVGYQPYRVVVLSFELLHAPATVCGPKKSTNLVKNKCVN

FNFNGLTGTGVLTESNKKFLPFQQFGRDIADTTDAVRDPQTLEILDITPCSFGGVSVITP

GTNTSNQVAVLYQDVNCTEVPVAIHADQLTPTWRVYSTGSNVFQTRAGCLIGAEHVNNSY

ECDIPIGAGICASYQTQTNSPRRARSVASQSIIAYTMSLGAENSVAYSNNSIAIPTNFTI

SVTTEILPVSMTKTSVDCTMYICGDSTECSNLLLQYGSFCTQLNRALTGIAVEQDKNTQE

VFAQVKQIYKTPPIKDFGGFNFSQILPDPSKPSKRSFIEDLLFNKVTLADAGFIKQYGDC

LGDIAARDLICAQKFNGLTVLPPLLTDEMIAQYTSALLAGTITSGWTFGAGAALQIPFAM

QMAYRFNGIGVTQNVLYENQKLIANQFNSAIGKIQDSLSSTASALGKLQDVVNQNAQALN

TLVKQLSSNFGAISSVLNDILSRLDKVEAEVQIDRLITGRLQSLQTYVTQQLIRAAEIRA

SANLAATKMSECVLGQSKRVDFCGKGYHLMSFPQSAPHGVVFLHVTYVPAQEKNFTTAPA

ICHDGKAHFPREGVFVSNGTHWFVTQRNFYEPQIITTDNTFVSGNCDVVIGIVNNTVYDP

LQPELDSFKEELDKYFKNHTSPDVDLGDISGINASVVNIQKEIDRLNEVAKNLNESLIDL

QELGKYEQYIKWPWYIWLGFIAGLIAIVMVTIMLCCMTSCCSCLKGCCSCGSCCKFDEDD

SEPVLKGVKLHYT

In the context of database searching, a query sequence and a reference sequence that we hypothesize to be homologous can be identical to one another, or they can differ as a result of mutation events. When sequences differ, we’re often then interested in how much they differ, or their pairwise similarity, which can help us identify the most closely related of several homologs in the reference database. There is an important distinction in the terms homology and similarity: homology is a discrete variable, and similarity is a continuous variable. A pair of biological sequences either are or are not derived from a common ancestor, but they can be more or less similar to each other. Saying that two sequences are 80% homologous doesn’t make sense. What people generally mean when they say this is that two sequences are 80% similar, and as a result they are hypothesizing homology between the sequences.

Similarity between a pair of sequences can be computed in a few different ways. In this text, unless otherwise noted, we’ll compute similar as: \(Similarity = 1 - Hamming\ distance\). Recall that Hamming distance is the fraction of positions that differ between aligned sequences. Similarity is therefore the inverse of that: the fraction of positions that do not differ between aligned sequences.

Defining the problem¶

As mentioned above, if we want to perform a homology search we’ll have one or more query sequences, and for each we want to know which sequence(s) in a reference database it is most similar to.

Sequence homology searching can be implemented in a few ways. In this chapter, we’ll use the local alignment function that we worked with in the Pairwise Alignment chapter, local_pairwise_align_ssw, run it many times to search one query sequence against many reference sequences, and investigate the highest scoring alignment(s) to identify the best database match. Remember that you can always get help with a function by passing it as an argument to help:

from skbio.alignment import local_pairwise_align_ssw

help(local_pairwise_align_ssw)

Help on function local_pairwise_align_ssw in module skbio.alignment._pairwise:

local_pairwise_align_ssw(sequence1, sequence2, **kwargs)

Align query and target sequences with Striped Smith-Waterman.

State: Experimental as of 0.4.0.

Parameters

----------

sequence1 : DNA, RNA, or Protein

The first unaligned sequence

sequence2 : DNA, RNA, or Protein

The second unaligned sequence

Returns

-------

tuple

``TabularMSA`` object containing the aligned sequences, alignment score

(float), and start/end positions of each input sequence (iterable

of two-item tuples). Note that start/end positions are indexes into the

unaligned sequences.

Notes

-----

This is a wrapper for the SSW package [1]_.

For a complete list of optional keyword-arguments that can be provided,

see ``skbio.alignment.StripedSmithWaterman``.

The following kwargs will not have any effect: `suppress_sequences`,

`zero_index`, and `protein`

If an alignment does not meet a provided filter, `None` will be returned.

References

----------

.. [1] Zhao, Mengyao, Wan-Ping Lee, Erik P. Garrison, & Gabor T.

Marth. "SSW Library: An SIMD Smith-Waterman C/C++ Library for

Applications". PLOS ONE (2013). Web. 11 July 2014.

http://www.plosone.org/article/info:doi/10.1371/journal.pone.0082138

See Also

--------

skbio.alignment.StripedSmithWaterman

When our reference database starts getting hundreds of millions of bases long (as would be the case if we were searching against 97% OTUs from the Greengenes small-subunit ribosomal RNA (SSU rRNA) reference database), billions of bases long (as would be the case if we were searching against the human genome) or trillions of bases long (as would be the case if we were searching against the NCBI non-redundant nucleotide database), runtime becomes an important consideration. For that reason, learning about heuristic algorithms is an essential part of learning about sequence homology searching. Heuristic algorithms apply some rules (i.e., heuristics) to approximate the correct solution to a problem in a fraction of the runtime that would be required if we wanted to be guaranteed to find the correct solution. Heuristic algorithms are very common in bioinformatics, and we’ll use them in several other places in this book.

While we’ll be aligning nucleotide sequences in this chapter, the same concepts apply to protein homology searching.

Loading annotated sequences¶

The first thing we’ll do as we learn about sequence homology searching is load some annotated sequences. The sequences that we’re going to work with are derived from the Greengenes database. Greengenes is a database of 16S rRNA gene sequences, a component of the archaeal and bacterial ribosome (the molecular machine that drives translation of mRNA to proteins). This gene is of a lot of interest to biologists because it’s one of about 200 genes that are encoded in the genomes of all known cellular organisms. The sequences in Greengenes are taxonomically annotated, meaning that we’ll have a collection of gene sequences and the taxonomic identity of the organism whose genome the sequence is found in. If we search an unannotated 16S rRNA query sequence against this database, we can make inferences about what organism our query sequence is from.

First, let’s load Greengenes into a list of skbio.DNA sequence objects, and associate the taxonomy of each sequence as sequence metadata.

# This cell performs some configuration for this notebook. It's hidden by

# default because it's not relevant to the content of this chapter. You'll

# occasionally notice that I hide this type of information so it's not

# distracting.

%pylab inline

from IPython.core import page

page.page = print

Populating the interactive namespace from numpy and matplotlib

import qiime_default_reference as qdr

import skbio

def load_taxonomy_reference_database(verbose=True):

# Load the taxonomic data

reference_taxonomy = {}

for e in open(qdr.get_reference_taxonomy()):

seq_id, seq_tax = e.strip().split('\t')

reference_taxonomy[seq_id] = seq_tax

# Load the reference sequences, and associate the taxonomic annotation with

# each as metadata

reference_db = []

for e in skbio.io.read(qdr.get_reference_sequences(), format='fasta', constructor=skbio.DNA):

if e.has_degenerates():

# For the purpose of this lesson, we're going to ignore sequences that contain

# degenerate characters (i.e., characters other than A, C, G, or T)

continue

seq_tax = reference_taxonomy[e.metadata['id']]

e.metadata['taxonomy'] = seq_tax

reference_db.append(e)

if verbose:

print("%s sequences were loaded from the reference database." % len(reference_db))

return reference_taxonomy, reference_db

reference_taxonomy, reference_db = load_taxonomy_reference_database()

88452 sequences were loaded from the reference database.

Next, we’ll just inspect a couple of the sequences we loaded. Notice how the specificity of our taxonomic annotations (i.e., how many taxonomic levels are annotated and unknown) differs for different sequences.

reference_db[0]

DNA

-----------------------------------------------------------------------

Metadata:

'description': ''

'id': '1111883'

'taxonomy': 'k__Bacteria; p__Gemmatimonadetes; c__Gemm-1; o__; f__;

g__; s__'

Stats:

length: 1428

has gaps: False

has degenerates: False

has definites: True

GC-content: 61.90%

-----------------------------------------------------------------------

0 GCTGGCGGCG TGCCTAACAC ATGTAAGTCG AACGGGACTG GGGGCAACTC CAGTTCAGTG

60 GCAGACGGGT GCGTAACACG TGAGCAACTT GTCCGACGGC GGGGGATAGC CGGCCCAACG

...

1320 GCCGCGGTGA ATACGTTCCC GGGCCTTGTA CACACCGCCC GTCACGCCAT GGAAGCCGGA

1380 GGGACCCGAA ACCGGTGGGC CAACCGCAAG GGGGCAGCCG TCTAAGGT

reference_db[-1]

DNA

----------------------------------------------------------------------

Metadata:

'description': ''

'id': '4483258'

'taxonomy': 'k__Archaea; p__Crenarchaeota; c__Thermoprotei;

o__Thermoproteales; f__Thermoproteaceae; g__; s__'

Stats:

length: 2123

has gaps: False

has degenerates: False

has definites: True

GC-content: 58.36%

----------------------------------------------------------------------

0 CTGGTTGATC CTGCCGGACC CGACCGCTAT CGGGGTGGGG CTTAGCCATG CGAGTCAAGC

60 GCCCCAGGGA CCCGCTGGGG TGCGGCGCAC GGCTCAGTAA CACGTGGCCA ACCTACCCTC

...

2040 ATAATCTCCT TATTGTCTGA TCCTTATGCA TTTTCCTTTG GCCCATCCCG TGAATACGCG

2100 CGGTGAATAC GTCCCTGCCC CTT

For the sake of runtime, we’re going to work through this chapter using a random sample of sequences from this database. Here we’ll use Python’s random module to select sequences at random.

import random

reference_db = random.sample(reference_db, k=5000)

print("%s sequences are present in the subsampled database." % len(reference_db))

5000 sequences are present in the subsampled database.

We’ll also extract some sequences from Greengenes to use as query sequences in our database searches. This time we won’t annotate them (to simulate not knowing what organisms they’re from). We’ll also trim these sequences so they’re shorter than the full length references. This will simulate obtaining a partial gene sequence, as is most common with the current sequencing technologies (as of this writing), but will also help to make the examples run faster.

Note that some of our query sequences may also be in our subsampled reference database and some won’t. This is realistic: sometimes we’re working with sequences that are exact matches to known sequences, and sometimes we’re working with sequences that don’t match any known sequences (or at least any in the reference database that we’re working with).

def load_taxonomy_query_sequences(start_position=100, length=200):

queries = []

for e in skbio.io.read(qdr.get_reference_sequences(), format='fasta', constructor=skbio.DNA):

if e.has_degenerates():

# For the purpose of this lesson, we're going to ignore sequences that contain

# degenerate characters (i.e., characters other than A, C, G, or T)

continue

e = e[start_position:start_position + length]

queries.append(e)

return queries

queries = load_taxonomy_query_sequences()

queries = random.sample(queries, k=50)

Let’s inspect a couple of the query sequences that we’ll work with.

queries[0]

DNA

---------------------------------------------------------------------

Metadata:

'description': ''

'id': '1110988'

Stats:

length: 200

has gaps: False

has degenerates: False

has definites: True

GC-content: 46.50%

---------------------------------------------------------------------

0 GGTGAGTAAC ACGTGAGCAA CCTGCCTTTG AGAGAGGGAT AGCTTCGGGA AACTGATGGT

60 AATACCTCAT AACATATATT TAAGGCATCT TAGATATATC AAAGATTTAT CACTCAAAGA

120 TGGGCTCGCG TCTGATTAGC TGGTTGGTGA GGTAGCGGCT CACCAAGGCG ACGATCAGTA

180 GCCGGACTGA GAGGTTGAAC

queries[-1]

DNA

---------------------------------------------------------------------

Metadata:

'description': ''

'id': '547906'

Stats:

length: 200

has gaps: False

has degenerates: False

has definites: True

GC-content: 51.50%

---------------------------------------------------------------------

0 GTGGGCAACC TGCCCTATAC TGGGGAATAA TCACTGGAAA CGGTGACTAA TACCGCATGT

60 CATTGCGAGG GGGCATCCTC TTGCAAGAAA AGGAATTTAT TTCGGTATAG GATGGGCCCG

120 CATCTGATTA GCTAGTTGGT GAGATAACAG CCCACCAAGG CGACGATCAG TAGCCGACCT

180 GAGAGGGTGA TCGGCCACAT

Defining the problem¶

The problem that we are going to address here is as follows. We now have a query sequence (\(q_i\)) which is not taxonomically annotated (meaning we don’t know the taxonomy of the organism whose genome it is found in), and a reference database (\(R\)) of taxonomically annotated sequences (\(r_1, r_2, r_3, ... r_n\)). We want to infer a taxonomic annotation for \(q_i\). We’ll do this by identifying the most similar sequence(s) in \(R\) and associating their taxonomy with \(q_i\). Because we actually do know the taxonomy of \(q_i\) (to the extent that we trust the annotations in \(R\)), we can evaluate how well this approach works.

There are a few realistic features of the situation that we’ve set up here that I want you to be aware of.

All of the query and reference sequences are homologous. In this case, they are all sequences of the 16S rRNA gene from archaea and bacteria. This may or may not be the case in real-world applications. Sometimes you’ll work with gene-specific databases such as Greengenes, and sometimes you’ll work with non-specific databases such as the NCBI nucleotide database (nr). Regardless, the search process is similar.

The distance between each query sequence and its most closely related sequences in \(R\) will vary widely. Sometimes \(q\) will be an exact match to a reference sequence \(r_i\), and sometimes we may have as little as \(50\%\) similarity.

As we work through the next sections, imagine that we’re exploring scaling this system up, so that instead of searching just one or a few query sequences against the reference database, we ultimately want to apply this to search millions of sequences against the database. This would be the real-world problem we faced if we had collected 16S rRNA sequences from the environment (which would of course be unannotated) using high-throughput DNA sequencing.

A complete homology search function¶

Let’s define a homology search function that aligns each provided query sequences \(q_i\) with each of our reference database sequences (\(r_1, r_2, r_3, ... r_n\)). This function will take as input one or more query sequences, and the reference database. We’ll call the top scoring alignments for each \(q_i\) the best hits, and we’ll specifically request some number (n) of best hits for each \(q_i\). The output of this function will be a summary of the n best hits for each query sequence, including some technical information about the alignment and the taxonomy associated with the corresponding reference sequence. We’ll then review the taxonomy annotations for our best hits, and from those make an inference about the taxonomy annotation for \(q_i\).

Spend a minute looking at this function and try to understand what it’s doing.

import pandas as pd

from skbio.alignment import local_pairwise_align_ssw

def local_alignment_search(queries, reference_db, n=5,

aligner=local_pairwise_align_ssw):

results = []

indices = []

for q in queries:

# first we'll compute all of the alignments and their associated scores

hits = []

for r in reference_db:

aln, score, _ = aligner(q, r)

hits.append([r.metadata['id'], score, aln,

r.metadata['taxonomy']])

# then we reverse-sort them by score, and return the n highest

# scoring alignments (this needs to be updated so we only

# ever keep track of the n highest scoring alignments)

best_hits = sorted(hits, key=lambda e: e[1], reverse=True)[:n]

if len(best_hits) == 0:

# if there are no hits, log that information

indices.append((q.metadata['id'], "no matches"))

results.append(("n/a", np.nan, np.nan, np.nan))

else:

# otherwise compile and track some information about the n

# best hits

for r_id, score, aln, r_tax in best_hits:

percent_similarity = (100 * (1. - aln[0].distance(aln[1])))

aln_length = aln.shape[1]

indices.append((q.metadata['id'], r_id))

results.append((r_tax, percent_similarity,

aln_length, score))

index = pd.MultiIndex.from_tuples(indices, names=['query', 'reference'])

columns = ['reference taxonomy', 'percent similarity',

'alignment length', 'score']

results = pd.DataFrame(results, index=index, columns=columns)

return results

Now let’s perform some database searches. You can run the remaining code cells in this section a few times to experiment with searching different query sequences against the same reference database.

This next cell, which is the one that actually performs the database searches, will take a little bit of time to run (maybe up to a minute or two). There is some code in this cell that will track the runtime. As it’s running, think about how many query sequences we’re searching against how many reference sequences, and refer back to the number of sequences in the full reference database. Does this strategy seem scalable to millions of sequences, which as mentioned above might be our ultimate goal? When you know the per-sequence runtime of this search, estimate how long it would take to do this in seconds for one million sequences. Convert the time in seconds to a unit that will be more meaningful to you.

import time

start_time = time.time()

current_queries = random.sample(queries, k=4)

results = local_alignment_search(current_queries, reference_db)

stop_time = time.time()

print("Runtime: %1.4f sec per query" % ((stop_time - start_time) / len(current_queries)))

results

Runtime: 2.9513 sec per query

| reference taxonomy | percent similarity | alignment length | score | ||

|---|---|---|---|---|---|

| query | reference | ||||

| 354971 | 211339 | k__Bacteria; p__Firmicutes; c__Clostridia; o__... | 95.918367 | 196 | 352 |

| 543173 | k__Bacteria; p__Firmicutes; c__Bacilli; o__Lac... | 90.816327 | 196 | 302 | |

| 354905 | k__Bacteria; p__Firmicutes; c__Bacilli; o__Lac... | 86.666667 | 195 | 258 | |

| 744387 | k__Bacteria; p__Firmicutes; c__Bacilli; o__Lac... | 86.082474 | 194 | 251 | |

| 297314 | k__Bacteria; p__Firmicutes; c__Bacilli; o__Lac... | 85.204082 | 196 | 247 | |

| 326869 | 4445752 | k__Bacteria; p__Proteobacteria; c__Alphaproteo... | 95.000000 | 200 | 350 |

| 349639 | k__Bacteria; p__Proteobacteria; c__Alphaproteo... | 92.500000 | 200 | 325 | |

| 616682 | k__Bacteria; p__Proteobacteria; c__Alphaproteo... | 92.500000 | 200 | 325 | |

| 766020 | k__Bacteria; p__Proteobacteria; c__Alphaproteo... | 92.000000 | 200 | 320 | |

| 4714 | k__Bacteria; p__Proteobacteria; c__Alphaproteo... | 92.000000 | 200 | 320 | |

| 1111024 | 4438586 | k__Bacteria; p__Gemmatimonadetes; c__Gemmatimo... | 88.613861 | 202 | 284 |

| 4452903 | k__Bacteria; p__Gemmatimonadetes; c__Gemmatimo... | 86.124402 | 209 | 276 | |

| 212819 | k__Bacteria; p__Gemmatimonadetes; c__Gemmatimo... | 87.254902 | 204 | 269 | |

| 1148064 | k__Bacteria; p__Gemmatimonadetes; c__Gemmatimo... | 86.893204 | 206 | 266 | |

| 1813677 | k__Bacteria; p__Gemmatimonadetes; c__Gemmatimo... | 86.274510 | 204 | 264 | |

| 1726426 | 1726426 | k__Bacteria; p__Proteobacteria; c__Gammaproteo... | 100.000000 | 200 | 400 |

| 197286 | k__Bacteria; p__Proteobacteria; c__Gammaproteo... | 97.500000 | 200 | 375 | |

| 540402 | k__Bacteria; p__Proteobacteria; c__Gammaproteo... | 97.000000 | 200 | 370 | |

| 4217232 | k__Bacteria; p__Proteobacteria; c__Gammaproteo... | 97.000000 | 200 | 370 | |

| 736735 | k__Bacteria; p__Proteobacteria; c__Gammaproteo... | 96.500000 | 200 | 365 |

Now, let’s try to answer our initial question: what is the most likely taxonomic annotation for each of our query sequences? Spend a few minutes reviewing this information, and write down what you think the most likely taxonomic annotation is for each of the query sequences. Here are some hints to help you out:

The

k,p,c,o,f,g, andsrefer to kingdom, phylum, class, order, family, genus, and species, respectively. If you see an annotation for a reference sequence that looks likeg__, that means that the genus is unknown for that sequence.Just as the reference taxonomy annotations don’t always go down to the species level, your taxonomic annotations don’t have to either. Not assigning at a given level implies that you’re uncertain about what the annotation should be at that level, and it’s usually better just to indicate that you’re uncertain rather than make a bad guess. If you’re uncertain of what the species is, assign the query

s__and try to decide what the most likely genus is. If you’re uncertain of the genus, assigng__, and try to decide what the most likely family is…As you look at each of the reference taxonomy annotations below, refer back to the table above to look at the percent similarity between each query and reference, and maybe the length of the alignments and their scores. These values give you an idea of how confident you should be in each of your taxonomic annotations.

for q in current_queries:

q_id = q.metadata['id']

print('Closest taxonomies for query %s (in order):' % q_id)

for e in results['reference taxonomy'][q_id]:

print(' ', e)

print()

Closest taxonomies for query 354971 (in order):

k__Bacteria; p__Firmicutes; c__Clostridia; o__Clostridiales; f__; g__; s__

k__Bacteria; p__Firmicutes; c__Bacilli; o__Lactobacillales; f__Lactobacillaceae; g__Lactobacillus; s__

k__Bacteria; p__Firmicutes; c__Bacilli; o__Lactobacillales; f__Lactobacillaceae; g__Lactobacillus; s__

k__Bacteria; p__Firmicutes; c__Bacilli; o__Lactobacillales; f__Lactobacillaceae; g__Lactobacillus; s__

k__Bacteria; p__Firmicutes; c__Bacilli; o__Lactobacillales; f__; g__; s__

Closest taxonomies for query 326869 (in order):

k__Bacteria; p__Proteobacteria; c__Alphaproteobacteria; o__; f__; g__; s__

k__Bacteria; p__Proteobacteria; c__Alphaproteobacteria; o__Rhizobiales; f__; g__; s__

k__Bacteria; p__Proteobacteria; c__Alphaproteobacteria; o__Rhizobiales; f__Hyphomicrobiaceae; g__Rhodoplanes; s__

k__Bacteria; p__Proteobacteria; c__Alphaproteobacteria; o__Rhizobiales; f__Bradyrhizobiaceae; g__; s__

k__Bacteria; p__Proteobacteria; c__Alphaproteobacteria; o__Rhizobiales; f__Beijerinckiaceae; g__; s__

Closest taxonomies for query 1111024 (in order):

k__Bacteria; p__Gemmatimonadetes; c__Gemmatimonadetes; o__Gemmatimonadales; f__Ellin5301; g__; s__

k__Bacteria; p__Gemmatimonadetes; c__Gemmatimonadetes; o__; f__; g__; s__

k__Bacteria; p__Gemmatimonadetes; c__Gemmatimonadetes; o__; f__; g__; s__

k__Bacteria; p__Gemmatimonadetes; c__Gemmatimonadetes; o__; f__; g__; s__

k__Bacteria; p__Gemmatimonadetes; c__Gemmatimonadetes; o__KD8-87; f__; g__; s__

Closest taxonomies for query 1726426 (in order):

k__Bacteria; p__Proteobacteria; c__Gammaproteobacteria; o__Enterobacteriales; f__Enterobacteriaceae; g__Serratia; s__

k__Bacteria; p__Proteobacteria; c__Gammaproteobacteria; o__Enterobacteriales; f__Enterobacteriaceae; g__; s__

k__Bacteria; p__Proteobacteria; c__Gammaproteobacteria; o__Enterobacteriales; f__Enterobacteriaceae; g__; s__

k__Bacteria; p__Proteobacteria; c__Gammaproteobacteria; o__Enterobacteriales; f__Enterobacteriaceae; g__Klebsiella; s__

k__Bacteria; p__Proteobacteria; c__Gammaproteobacteria; o__Enterobacteriales; f__Enterobacteriaceae; g__; s__

Because we have taxonomic annotations for all of the Greengenes sequences (though as you probably have noticed by now, they differ in their specificity), we can next look at taxonomy associated with each of our queries in Greengenes. How do your annotations compare to those from Greengenes, which we’ll print out in the next cell?

for q in current_queries:

q_id = q.metadata['id']

print('Known taxonomy for query %s:\n %s' % (q_id, reference_taxonomy[q_id]))

print()

Known taxonomy for query 354971:

k__Bacteria; p__Firmicutes; c__Bacilli; o__Lactobacillales; f__Lactobacillaceae; g__Lactobacillus; s__

Known taxonomy for query 326869:

k__Bacteria; p__Proteobacteria; c__Alphaproteobacteria; o__; f__; g__; s__

Known taxonomy for query 1111024:

k__Bacteria; p__Gemmatimonadetes; c__Gemmatimonadetes; o__Gemmatimonadales; f__; g__; s__

Known taxonomy for query 1726426:

k__Bacteria; p__Proteobacteria; c__Gammaproteobacteria; o__Enterobacteriales; f__Enterobacteriaceae; g__Serratia; s__

Reducing the runtime for database searches¶

In the examples above, it’s taking on the order of 5-15 seconds to search a single sequence against our subset of Greengenes. This makes sense when you think about the computations that are being performed. For every sequence in our reference database (5000, if you haven’t modified the database subsampling step) it is computing the \(F\) and \(T\) matrices described in the Pairwise Alignment chapter, and then tracing back the matrix to compute the aligned sequences. Given all of that, the fact that computation only takes 5-15 seconds is pretty incredible. However, that doesn’t change the fact that this doesn’t scale to real-world applications because we’d have to wait way too long for results. Performing all pairwise alignments is prohibitively expensive for database searching.

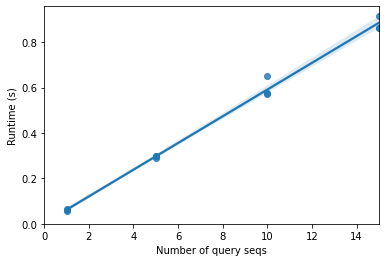

As we discussed in the previous chapter, the run time of pairwise alignment scales quadratically with sequence length. Database searching, at least in the example we’re exploring in this chapter, is a bit of a different problem however. Our sequence lengths aren’t changing, but rather it takes a long time because we’re performing a computationally expensive step, pairwise alignment, many times. Our database is fixed in that the number of sequences in it doesn’t change and the sequences themselves don’t change. Our query sequences are all exactly the same length in this example (remember that we set that above, when we sliced a single region from reference database sequences to create our query sequences). Let’s explore how the runtime of this database search scales under these constraints.

import pandas as pd

import itertools

def tabulate_local_alignment_search_runtime(queries, reference_db, n_query_sequences,

n_reference_sequences, search_function):

data = []

# we'll iterate over the pairs of number of query sequences

# and number of reference sequences, and compute the runtime

# of the database search three times for each pair (so we

# have some idea of the variance in the runtimes). this is

# achieved here with a nested for loop (i.e., a for loop

# within a for loop).

for nq, nr in itertools.product(n_query_sequences, n_reference_sequences):

for i in range(3):

# select nq query sequences at random

current_queries = random.sample(queries, k=nq)

# select nr reference sequences at random

temp_reference_db = random.sample(reference_db, k=nr)

# run the search and store its runtime

start_time = time.time()

_ = search_function(current_queries, temp_reference_db)

stop_time = time.time()

median_query_sequence_len = np.median([len(q) for q in current_queries])

median_reference_sequence_len = np.median([len(r) for r in temp_reference_db])

data.append((nq, nr, median_query_sequence_len, median_reference_sequence_len,

stop_time - start_time))

runtimes = pd.DataFrame(data=np.asarray(data),

columns=["Number of query seqs", "Number of reference seqs",

"Median query seq length", "Median reference seq length",

"Runtime (s)"] )

return runtimes

# we'll temporarily work with a smaller reference database

# so this will run a lot faster. this will be of fixed size.

n_reference_sequences = [100]

# since our database is smaller, we can work with some slightly

# larger numbers of sequences.

n_query_sequences = [1, 5, 10, 15]

local_alignment_search_runtimes = tabulate_local_alignment_search_runtime(queries, reference_db,

n_query_sequences, n_reference_sequences,

local_alignment_search)

local_alignment_search_runtimes

| Number of query seqs | Number of reference seqs | Median query seq length | Median reference seq length | Runtime (s) | |

|---|---|---|---|---|---|

| 0 | 1.0 | 100.0 | 200.0 | 1434.0 | 0.061491 |

| 1 | 1.0 | 100.0 | 200.0 | 1425.0 | 0.063632 |

| 2 | 1.0 | 100.0 | 200.0 | 1422.0 | 0.055392 |

| 3 | 5.0 | 100.0 | 200.0 | 1420.0 | 0.288887 |

| 4 | 5.0 | 100.0 | 200.0 | 1431.0 | 0.298724 |

| 5 | 5.0 | 100.0 | 200.0 | 1430.5 | 0.298148 |

| 6 | 10.0 | 100.0 | 200.0 | 1412.0 | 0.570900 |

| 7 | 10.0 | 100.0 | 200.0 | 1446.0 | 0.649386 |

| 8 | 10.0 | 100.0 | 200.0 | 1423.5 | 0.578227 |

| 9 | 15.0 | 100.0 | 200.0 | 1449.0 | 0.864610 |

| 10 | 15.0 | 100.0 | 200.0 | 1424.0 | 0.864101 |

| 11 | 15.0 | 100.0 | 200.0 | 1425.5 | 0.914325 |

This table shows that we’ve tried a few variations on number of query sequences but kept the number of reference sequences constant. There is no variance in the query sequence length, and there is a relatively small amount of variance in reference sequence length (they’re all of the same order of magnitude). There is also relatively little variance in runtime for fixed numbers of query and reference sequences.

This table clearly shows that there is an increase in runtime with an increasing number of query sequences, which we’d of course expect. What we care about is how runtime is increasing as a function of number of query sequences. Let’s plot runtime versus the number of query sequences to help us understand that relationship.

import seaborn as sns

ax = sns.regplot(x="Number of query seqs", y="Runtime (s)", data=local_alignment_search_runtimes)

ax.set_xlim(0)

ax.set_ylim(0)

ax

<AxesSubplot:xlabel='Number of query seqs', ylabel='Runtime (s)'>

What we see here is pretty clearly a linear relationship: \(runtime \approx constant \times number\ of\ query\ sequences\). This is because as we increase the number of query sequences, we’re increasing the number of pairwise alignments that we need to perform. If we have 5 queries and 10 reference sequences, we compute \(5 \times 10 = 50\) pairwise alignments. If we have 10 queries and 100 reference sequences, we compute \(10 \times 100 = 1000\) pairwise alignments. There are a few practical ways to reduce the runtime of a process like this.

The first seems obvious, and even silly at first: perform fewer alignments. This could be achieved in a few ways. You could reduce the number of query sequences, though this might be something a researcher is resistant to: they have some collection of unknown sequences, and they want to know what they all are. You could alternatively reduce the number of reference sequences, but you might run into the same issues there: we wouldn’t want to exclude reference sequences that might provide us with useful information about our query sequences. Finally, we might be able to figure out some ways to perform fewer alignments by not searching all of the query sequences against all of the reference sequences. If we could come up with some procedure to approximate which pairwise alignments were likely to be good (i.e., high scoring) and which were likely to be bad (i.e., low scoring) that is faster than performing the pairwise alignments, we could apply that procedure and only align a pair of sequences when we expect to get a high score. That could potentially allow us to reduce the number of alignments we need to perform, and therefore the runtime of the algorithm.

Another approach to reducing the runtime of this process would be to create a faster implementation of the algorithm (though at some point that won’t be possible anymore), use a faster computer, or run the process in parallel on multiple processors. All of these would be ways to reduce the runtime of the search by some factor \(f\), where \(new\ runtime \approx \frac{runtime}{f}\).

In practice, for a production-scale sequence database search application like BLAST, we’d combine these approaches. In the next section we’ll explore ways to reduce the runtime of database searching for a fixed number of query sequences and a fixed number of reference sequences by reducing the number of pairwise alignments that the search function will perform.

Heuristic algorithms¶

As mentioned above, it just takes too long to search individual query sequences against a large database. This problem also isn’t going away anytime soon. While computers are getting faster (or cheaper), the size of our sequences collections are getting bigger because sequencing is getting cheaper. In fact, many people think that obtaining DNA sequences is getting cheaper faster than computers are getting cheaper. As our number of query sequences increases because we are able to obtain more for the same amount of money, and the size of our reference databases increases (because we’re continuously obtaining more sequence data) this will increasingly become a bigger problem. Figures 1 and 2, respectively, illustrate that these are both real-world issues. Notice that the axes are on a log scale in both cases.

import IPython.display

IPython.display.IFrame(width="600", height="394", src="https://docs.google.com/spreadsheets/d/1vUkUuZsRlLW5U05rXXUn8B2sDYwShkClRMGa8Wiu6bc/pubchart?oid=1844125885&format=interactive")

Figure 1: Genome sequencing costs.

import IPython.display

IPython.display.IFrame(width="763", height="371", src="https://docs.google.com/spreadsheets/d/1vUkUuZsRlLW5U05rXXUn8B2sDYwShkClRMGa8Wiu6bc/pubchart?oid=2103353397&format=interactive")

Figure 2: Size of GenBank.

One way that we can deal with this problem is by recognizing that most of the alignments that are performed in a database search are unlikely to be very good alignments. An algorithm developer could therefore improve runtime by defining a heuristic (or a rule) that is applied to determine which reference sequences are likely to result in good alignments, and only aligning the query against those. For it to be useful, making the decision to align or not (i.e., applying the heuristic) must be much faster than actually performing the pairwise alignment. The heuristic also needs to make good choices about which reference sequences to align the query against. If the algorithm chooses to not align against a specific reference, that reference is ruled out as a possible result of the database search. A good heuristic for sequence homology searching would therefore be very unlikely to exclude the best alignment(s). When thinking about heuristic algorithms in general, there are some important considerations:

How often does the heuristic algorithm fail to get the right answer (in our case, does it make good choices about which reference sequences to align against)?

How much faster is the heuristic than the “complete” approach, and is that reduction in runtime enough to justify not being guaranteed to get the best answer?

We’ll now look at a few heuristics in the context of these questions.

Random reference sequence selection¶

Our first heuristic will be a straw man that we use as a baseline. We’ll select a random \(p\%\) of the reference sequences to align our query against. This will clearly result in a large decrease in the number of sequence alignments that we need to perform because we’ll go from performing \(R_s\) (the reference database size) sequence alignments to \(p \times R_s\) sequence alignments for each query sequence \(q_i\).

Here’s the source code for this. You can see that we’re just wrapping our local_alignment_search function in a function that samples down to \(p\%\) of the reference sequences.

import random

def heuristic_local_alignment_search_random(

queries, reference_db, p, n=5, aligner=local_pairwise_align_ssw):

k = int(p * len(reference_db))

database_subset = random.sample(reference_db, k)

return local_alignment_search(queries, database_subset, n=n, aligner=aligner)

Let’s select some new queries and see how the results compare to our known taxonomies.

current_queries = random.sample(queries, k=10)

results = heuristic_local_alignment_search_random(current_queries, reference_db, p=0.10)

for q in current_queries:

q_id = q.metadata['id']

print('Closest taxonomies for query %s (in order):' % q_id)

for e in results['reference taxonomy'][q_id]:

print(' ', e)

print()

Closest taxonomies for query 4366045 (in order):

k__Bacteria; p__Firmicutes; c__Clostridia; o__Clostridiales; f__Veillonellaceae; g__; s__

k__Bacteria; p__Firmicutes; c__Clostridia; o__Clostridiales; f__Peptostreptococcaceae; g__; s__

k__Bacteria; p__Firmicutes; c__Clostridia; o__Clostridiales; f__Veillonellaceae; g__Sporomusa; s__

k__Bacteria; p__Firmicutes; c__Clostridia; o__Clostridiales; f__Ruminococcaceae; g__; s__

k__Bacteria; p__Firmicutes; c__Clostridia; o__Clostridiales; f__Ruminococcaceae; g__Ruminococcus; s__flavefaciens

Closest taxonomies for query 1111024 (in order):

k__Bacteria; p__Gemmatimonadetes; c__Gemmatimonadetes; o__Gemmatimonadales; f__Ellin5301; g__; s__

k__Bacteria; p__Gemmatimonadetes; c__Gemmatimonadetes; o__C114; f__; g__; s__

k__Bacteria; p__Gemmatimonadetes; c__Gemmatimonadetes; o__Gemmatimonadales; f__; g__; s__

k__Bacteria; p__Gemmatimonadetes; c__Gemmatimonadetes; o__Gemmatimonadales; f__Gemmatimonadaceae; g__Gemmatimonas; s__

k__Bacteria; p__Verrucomicrobia; c__Opitutae; o__; f__; g__; s__

Closest taxonomies for query 2729797 (in order):

k__Bacteria; p__Proteobacteria; c__Betaproteobacteria; o__Burkholderiales; f__Oxalobacteraceae; g__; s__

k__Bacteria; p__Proteobacteria; c__Betaproteobacteria; o__Burkholderiales; f__Comamonadaceae; g__; s__

k__Bacteria; p__Proteobacteria; c__Betaproteobacteria; o__Burkholderiales; f__Oxalobacteraceae; g__; s__

k__Bacteria; p__Proteobacteria; c__Betaproteobacteria; o__Thiobacterales; f__; g__; s__

k__Bacteria; p__Proteobacteria; c__Betaproteobacteria; o__Rhodocyclales; f__Rhodocyclaceae; g__; s__

Closest taxonomies for query 864506 (in order):

k__Bacteria; p__Proteobacteria; c__Gammaproteobacteria; o__Chromatiales; f__; g__; s__

k__Bacteria; p__Proteobacteria; c__Gammaproteobacteria; o__Alteromonadales; f__OM60; g__; s__

k__Bacteria; p__Proteobacteria; c__Gammaproteobacteria; o__Alteromonadales; f__OM60; g__; s__

k__Bacteria; p__Proteobacteria; c__Gammaproteobacteria; o__Alteromonadales; f__Alteromonadaceae; g__Marinobacter; s__

k__Bacteria; p__Proteobacteria; c__Gammaproteobacteria; o__Alteromonadales; f__Alteromonadaceae; g__nsmpVI18; s__

Closest taxonomies for query 937848 (in order):

k__Bacteria; p__Verrucomicrobia; c__[Spartobacteria]; o__[Chthoniobacterales]; f__[Chthoniobacteraceae]; g__; s__

k__Bacteria; p__Verrucomicrobia; c__[Spartobacteria]; o__[Chthoniobacterales]; f__[Chthoniobacteraceae]; g__DA101; s__

k__Bacteria; p__Verrucomicrobia; c__[Methylacidiphilae]; o__S-BQ2-57; f__; g__; s__

k__Bacteria; p__Firmicutes; c__Clostridia; o__Thermoanaerobacterales; f__Thermoanaerobacteraceae; g__Thermoanaerobacter; s__uzonensis

k__Bacteria; p__Chloroflexi; c__S085; o__; f__; g__; s__

Closest taxonomies for query 4336536 (in order):

k__Bacteria; p__OD1; c__SM2F11; o__; f__; g__; s__

k__Bacteria; p__Acidobacteria; c__Acidobacteria-6; o__iii1-15; f__; g__; s__

k__Bacteria; p__Acidobacteria; c__Acidobacteria-6; o__iii1-15; f__; g__; s__

k__Bacteria; p__Acidobacteria; c__Acidobacteria-6; o__iii1-15; f__; g__; s__

k__Bacteria; p__Acidobacteria; c__Acidobacteria-6; o__iii1-15; f__; g__; s__

Closest taxonomies for query 774561 (in order):

k__Bacteria; p__Actinobacteria; c__Actinobacteria; o__Actinomycetales; f__Micrococcaceae; g__; s__

k__Bacteria; p__Actinobacteria; c__Actinobacteria; o__Actinomycetales; f__Microbacteriaceae; g__; s__

k__Bacteria; p__Actinobacteria; c__Actinobacteria; o__Actinomycetales; f__Bogoriellaceae; g__Georgenia; s__

k__Bacteria; p__Actinobacteria; c__Actinobacteria; o__Actinomycetales; f__Pseudonocardiaceae; g__Pseudonocardia; s__

k__Bacteria; p__Actinobacteria; c__Actinobacteria; o__Actinomycetales; f__Nocardioidaceae; g__; s__

Closest taxonomies for query 4353245 (in order):

k__Bacteria; p__Proteobacteria; c__Alphaproteobacteria; o__Rhodobacterales; f__Rhodobacteraceae; g__Paracoccus; s__

k__Bacteria; p__Proteobacteria; c__Alphaproteobacteria; o__Rhizobiales; f__Hyphomicrobiaceae; g__Rhodoplanes; s__

k__Bacteria; p__Proteobacteria; c__Alphaproteobacteria; o__Rhodospirillales; f__Rhodospirillaceae; g__; s__

k__Bacteria; p__Proteobacteria; c__Alphaproteobacteria; o__; f__; g__; s__

k__Bacteria; p__Proteobacteria; c__Alphaproteobacteria; o__Rhodobacterales; f__Rhodobacteraceae; g__; s__

Closest taxonomies for query 92144 (in order):

k__Bacteria; p__Firmicutes; c__Clostridia; o__Clostridiales; f__Lachnospiraceae; g__Blautia; s__

k__Bacteria; p__Firmicutes; c__Clostridia; o__Clostridiales; f__Lachnospiraceae; g__; s__

k__Bacteria; p__Firmicutes; c__Clostridia; o__Clostridiales; f__Lachnospiraceae; g__; s__

k__Bacteria; p__Firmicutes; c__Clostridia; o__Clostridiales; f__Lachnospiraceae; g__[Ruminococcus]; s__

k__Bacteria; p__Firmicutes; c__Clostridia; o__Clostridiales; f__; g__; s__

Closest taxonomies for query 3394691 (in order):

k__Bacteria; p__Proteobacteria; c__Alphaproteobacteria; o__Kiloniellales; f__Kiloniellaceae; g__; s__

k__Bacteria; p__Proteobacteria; c__Alphaproteobacteria; o__Rhodospirillales; f__Rhodospirillaceae; g__; s__

k__Bacteria; p__Proteobacteria; c__Alphaproteobacteria; o__; f__; g__; s__

k__Bacteria; p__Proteobacteria; c__Alphaproteobacteria; o__Sphingomonadales; f__Sphingomonadaceae; g__Kaistobacter; s__

k__Bacteria; p__Proteobacteria; c__Alphaproteobacteria; o__; f__; g__; s__

for q in current_queries:

q_id = q.metadata['id']

print('Known taxonomy for query %s:\n %s' % (q_id, reference_taxonomy[q_id]))

Known taxonomy for query 4366045:

k__Bacteria; p__Firmicutes; c__Clostridia; o__Clostridiales; f__Veillonellaceae; g__BSV43; s__

Known taxonomy for query 1111024:

k__Bacteria; p__Gemmatimonadetes; c__Gemmatimonadetes; o__Gemmatimonadales; f__; g__; s__

Known taxonomy for query 2729797:

k__Bacteria; p__Proteobacteria; c__Betaproteobacteria; o__Burkholderiales; f__Oxalobacteraceae; g__; s__

Known taxonomy for query 864506:

k__Bacteria; p__Proteobacteria; c__Gammaproteobacteria; o__Chromatiales; f__; g__; s__

Known taxonomy for query 937848:

k__Bacteria; p__Verrucomicrobia; c__[Spartobacteria]; o__[Chthoniobacterales]; f__[Chthoniobacteraceae]; g__heteroC45_4W; s__

Known taxonomy for query 4336536:

k__Bacteria; p__Acidobacteria; c__Acidobacteria-6; o__iii1-15; f__RB40; g__; s__

Known taxonomy for query 774561:

k__Bacteria; p__Actinobacteria; c__Actinobacteria; o__Actinomycetales; f__Micrococcaceae; g__Microbispora; s__rosea

Known taxonomy for query 4353245:

k__Bacteria; p__Proteobacteria; c__Alphaproteobacteria; o__Rhodobacterales; f__Rhodobacteraceae; g__; s__

Known taxonomy for query 92144:

k__Bacteria; p__Firmicutes; c__Clostridia; o__Clostridiales; f__Lachnospiraceae; g__; s__

Known taxonomy for query 3394691:

k__Bacteria; p__Proteobacteria; c__Alphaproteobacteria; o__BD7-3; f__; g__; s__

What we need now is a way to know how often we get the “right answer”, and how long this heuristic algorithm takes relative to the complete algorithm. We therefore first need to define what the “right answer” is. How about this: if the most common taxonomy assignment resulting from the database search at taxonomy_levels levels of taxonomy (i.e., how deep or specific our assignment is) matches the known taxonomy, then our algorithm has achieved the right answer. We can vary taxonomy_levels to see how the different heuristics perform at different levels.

Here’s what this would look like:

import collections

def evaluate_search(queries, reference_db, reference_taxonomy, search_function, taxonomy_levels, n=5, aligner=local_pairwise_align_ssw):

start_time = time.time()

search_results = search_function(current_queries, reference_db, n=n, aligner=aligner)

stop_time = time.time()

runtime = stop_time - start_time

per_query_runtime = runtime/len(queries)

data = []

indices = []

for q in queries:

q_id = q.metadata['id']

indices.append(q_id)

q_known_taxonomy = tuple(reference_taxonomy[q_id].split('; ')[:taxonomy_levels])

q_observed_taxonomies = collections.Counter()

for e in search_results['reference taxonomy'][q_id]:

q_observed_taxonomies[tuple(e.split('; ')[:taxonomy_levels])] += 1

q_observed_taxonomy = q_observed_taxonomies.most_common()[0][0]

data.append((q_known_taxonomy, q_observed_taxonomy))

index = pd.Index(indices, name='Query ID')

data = pd.DataFrame(data, index=index, columns=['Known taxonomy', 'Observed taxonomy'])

number_correct = np.sum(data['Known taxonomy'] == data['Observed taxonomy'])

fraction_correct = number_correct / data.shape[0]

return per_query_runtime, fraction_correct, data

First let’s see how this works for our full database search algorithm. What’s the runtime, and how often do we get the correct answer? We’ll start with five levels of taxonomy (which corresponds to the family level). This step will take a couple of minutes to run, because it’s doing the full database search.

taxonomy_levels = 5

runtime, fraction_correct, data = evaluate_search(current_queries, reference_db, reference_taxonomy,

local_alignment_search, taxonomy_levels=taxonomy_levels)

print('%1.2f seconds per query sequence' % runtime)

print('%1.2f%% correct answers' % (fraction_correct * 100.0))

print('Result details:')

for q_id in data.index:

print(q_id)

print(' ', data['Known taxonomy'][q_id])

print(' ', data['Observed taxonomy'][q_id])

print()

2.97 seconds per query sequence

70.00% correct answers

Result details:

4366045

('k__Bacteria', 'p__Firmicutes', 'c__Clostridia', 'o__Clostridiales', 'f__Veillonellaceae')

('k__Bacteria', 'p__Firmicutes', 'c__Clostridia', 'o__Clostridiales', 'f__Veillonellaceae')

1111024

('k__Bacteria', 'p__Gemmatimonadetes', 'c__Gemmatimonadetes', 'o__Gemmatimonadales', 'f__')

('k__Bacteria', 'p__Gemmatimonadetes', 'c__Gemmatimonadetes', 'o__', 'f__')

2729797

('k__Bacteria', 'p__Proteobacteria', 'c__Betaproteobacteria', 'o__Burkholderiales', 'f__Oxalobacteraceae')

('k__Bacteria', 'p__Proteobacteria', 'c__Betaproteobacteria', 'o__Burkholderiales', 'f__Oxalobacteraceae')

864506

('k__Bacteria', 'p__Proteobacteria', 'c__Gammaproteobacteria', 'o__Chromatiales', 'f__')

('k__Bacteria', 'p__Proteobacteria', 'c__Gammaproteobacteria', 'o__[Marinicellales]', 'f__[Marinicellaceae]')

937848

('k__Bacteria', 'p__Verrucomicrobia', 'c__[Spartobacteria]', 'o__[Chthoniobacterales]', 'f__[Chthoniobacteraceae]')

('k__Bacteria', 'p__Verrucomicrobia', 'c__[Spartobacteria]', 'o__[Chthoniobacterales]', 'f__[Chthoniobacteraceae]')

4336536

('k__Bacteria', 'p__Acidobacteria', 'c__Acidobacteria-6', 'o__iii1-15', 'f__RB40')

('k__Bacteria', 'p__Acidobacteria', 'c__Acidobacteria-6', 'o__iii1-15', 'f__RB40')

774561

('k__Bacteria', 'p__Actinobacteria', 'c__Actinobacteria', 'o__Actinomycetales', 'f__Micrococcaceae')

('k__Bacteria', 'p__Actinobacteria', 'c__Actinobacteria', 'o__Actinomycetales', 'f__Micrococcaceae')

4353245

('k__Bacteria', 'p__Proteobacteria', 'c__Alphaproteobacteria', 'o__Rhodobacterales', 'f__Rhodobacteraceae')

('k__Bacteria', 'p__Proteobacteria', 'c__Alphaproteobacteria', 'o__Rhodobacterales', 'f__Rhodobacteraceae')

92144

('k__Bacteria', 'p__Firmicutes', 'c__Clostridia', 'o__Clostridiales', 'f__Lachnospiraceae')

('k__Bacteria', 'p__Firmicutes', 'c__Clostridia', 'o__Clostridiales', 'f__Lachnospiraceae')

3394691

('k__Bacteria', 'p__Proteobacteria', 'c__Alphaproteobacteria', 'o__BD7-3', 'f__')

('k__Bacteria', 'p__Proteobacteria', 'c__Alphaproteobacteria', 'o__Sphingomonadales', 'f__Sphingomonadaceae')

Next let’s see how this compares to our random heuristic search algorithm. Try running this a few times, as you might get different answers due to different random selections of the database.

import functools

heuristic_local_alignment_search_random_10 = functools.partial(heuristic_local_alignment_search_random, p=0.10)

runtime, fraction_correct, data = evaluate_search(current_queries, reference_db, reference_taxonomy,

heuristic_local_alignment_search_random_10, taxonomy_levels=taxonomy_levels)

print('%1.2f seconds per query sequence' % runtime)

print('%1.2f%% correct answers' % (fraction_correct * 100.0))

print('Result details:')

for q_id in data.index:

print(q_id)

print(' ', data['Known taxonomy'][q_id])

print(' ', data['Observed taxonomy'][q_id])

print()

0.30 seconds per query sequence

30.00% correct answers

Result details:

4366045

('k__Bacteria', 'p__Firmicutes', 'c__Clostridia', 'o__Clostridiales', 'f__Veillonellaceae')

('k__Bacteria', 'p__Firmicutes', 'c__Clostridia', 'o__Clostridiales', 'f__Peptococcaceae')

1111024

('k__Bacteria', 'p__Gemmatimonadetes', 'c__Gemmatimonadetes', 'o__Gemmatimonadales', 'f__')

('k__Bacteria', 'p__Verrucomicrobia', 'c__Verrucomicrobiae', 'o__Verrucomicrobiales', 'f__Verrucomicrobiaceae')

2729797

('k__Bacteria', 'p__Proteobacteria', 'c__Betaproteobacteria', 'o__Burkholderiales', 'f__Oxalobacteraceae')

('k__Bacteria', 'p__Proteobacteria', 'c__Betaproteobacteria', 'o__Burkholderiales', 'f__')

864506

('k__Bacteria', 'p__Proteobacteria', 'c__Gammaproteobacteria', 'o__Chromatiales', 'f__')

('k__Bacteria', 'p__Proteobacteria', 'c__Gammaproteobacteria', 'o__[Marinicellales]', 'f__[Marinicellaceae]')

937848

('k__Bacteria', 'p__Verrucomicrobia', 'c__[Spartobacteria]', 'o__[Chthoniobacterales]', 'f__[Chthoniobacteraceae]')

('k__Bacteria', 'p__Verrucomicrobia', 'c__', 'o__', 'f__')

4336536

('k__Bacteria', 'p__Acidobacteria', 'c__Acidobacteria-6', 'o__iii1-15', 'f__RB40')

('k__Bacteria', 'p__Acidobacteria', 'c__Acidobacteria-6', 'o__iii1-15', 'f__')

774561

('k__Bacteria', 'p__Actinobacteria', 'c__Actinobacteria', 'o__Actinomycetales', 'f__Micrococcaceae')

('k__Bacteria', 'p__Actinobacteria', 'c__Actinobacteria', 'o__Actinomycetales', 'f__Micrococcaceae')

4353245

('k__Bacteria', 'p__Proteobacteria', 'c__Alphaproteobacteria', 'o__Rhodobacterales', 'f__Rhodobacteraceae')

('k__Bacteria', 'p__Proteobacteria', 'c__Alphaproteobacteria', 'o__Rhodobacterales', 'f__Rhodobacteraceae')

92144

('k__Bacteria', 'p__Firmicutes', 'c__Clostridia', 'o__Clostridiales', 'f__Lachnospiraceae')

('k__Bacteria', 'p__Firmicutes', 'c__Clostridia', 'o__Clostridiales', 'f__Lachnospiraceae')

3394691

('k__Bacteria', 'p__Proteobacteria', 'c__Alphaproteobacteria', 'o__BD7-3', 'f__')

('k__Bacteria', 'p__Proteobacteria', 'c__Alphaproteobacteria', 'o__Sphingomonadales', 'f__Sphingomonadaceae')

Again, what’s the runtime, and how often do we get the correct answer? Based on comparison to the full search, what do you think: is this a good heuristic?

After performing many trials of the above searches, I get the correct genus-level assignment about half as often with the random reference database heuristic relative to the full database search. Your results might differ from that due to differences in the random selection of query and reference sequences. Try running all the cells in this section a few times.

Go back to the beginning of this section and try running this check based on fewer levels of taxonomy (i.e., decreased taxonomic specificity, such as the phylum) and on more levels of taxonomy (i.e., increased taxonomic specificity, such as the species level). How does that impact how often we get the right answer?

Composition-based reference sequence collection¶

While the random selection of database sequences can vastly reduce the runtime for database searching, we don’t get the right answer very often. Let’s try some heuristics that are a bit smarter. How about this: if the overall nucleotide composition of a query sequence is very different than the overall nucleotide composition of a reference sequence, it’s unlikely that the best alignment will result from that pairwise alignment, so don’t align the query to that reference sequence. Given that, how do we define “overall nucleotide composition” in a useful way?

GC content¶

One metric of sequence composition that we can compute quickly (because remember, this has to be a lot faster than computing the alignment for it to be worth it) is GC content. Let’s define a heuristic that only performs a pairwise alignment for the reference sequences that have the most similar GC content to the query sequence. The number of alignments that we’ll perform will be defined as database_subset_size.

database_subset_size = 500

def heuristic_local_alignment_search_gc(

queries, reference_db, database_subset_size, n=5,

reference_db_gc_contents=None,

aligner=local_pairwise_align_ssw):

results = []

if reference_db_gc_contents is None:

reference_db_gc_contents = \

{r.metadata['id'] : r.gc_content() for r in reference_db}

for q in queries:

query_gc_content = q.gc_content()

database_subset = []

for r in reference_db:

ref_gc_content = reference_db_gc_contents[r.metadata['id']]

# find the difference in GC content between the reference and

# query. we'll sort and select our reference sequences by this

# value

database_subset.append((abs(ref_gc_content - query_gc_content), r))

database_subset.sort(key=lambda x: x[0])

database_subset = [e[1] for e in database_subset[:database_subset_size]]

results.append(local_alignment_search(

[q], database_subset, n=n, aligner=aligner))

return pd.concat(results)

If we run our queries again, how often do we get the right answer? How much did we reduce runtime? Do you think this is a better or worse heuristic than what we implemented above?

heuristic_local_alignment_search_gc_2 = functools.partial(heuristic_local_alignment_search_gc, database_subset_size=database_subset_size)

runtime, fraction_correct, data = evaluate_search(current_queries, reference_db, reference_taxonomy,

heuristic_local_alignment_search_gc_2, taxonomy_levels=taxonomy_levels)

print('%1.2f seconds per query sequence' % runtime)

print('%1.2f%% correct answers' % (fraction_correct * 100.0))

print('Result details:')

for q_id in data.index:

print(q_id)

print(' ', data['Known taxonomy'][q_id])

print(' ', data['Observed taxonomy'][q_id])

print()

0.32 seconds per query sequence

10.00% correct answers

Result details:

4366045

('k__Bacteria', 'p__Firmicutes', 'c__Clostridia', 'o__Clostridiales', 'f__Veillonellaceae')

('k__Bacteria', 'p__Firmicutes', 'c__Clostridia', 'o__Clostridiales', 'f__Clostridiaceae')

1111024

('k__Bacteria', 'p__Gemmatimonadetes', 'c__Gemmatimonadetes', 'o__Gemmatimonadales', 'f__')

('k__Bacteria', 'p__Gemmatimonadetes', 'c__Gemmatimonadetes', 'o__', 'f__')

2729797

('k__Bacteria', 'p__Proteobacteria', 'c__Betaproteobacteria', 'o__Burkholderiales', 'f__Oxalobacteraceae')

('k__Bacteria', 'p__Proteobacteria', 'c__Betaproteobacteria', 'o__Burkholderiales', 'f__Comamonadaceae')

864506

('k__Bacteria', 'p__Proteobacteria', 'c__Gammaproteobacteria', 'o__Chromatiales', 'f__')

('k__Bacteria', 'p__Proteobacteria', 'c__Gammaproteobacteria', 'o__Pseudomonadales', 'f__Moraxellaceae')

937848

('k__Bacteria', 'p__Verrucomicrobia', 'c__[Spartobacteria]', 'o__[Chthoniobacterales]', 'f__[Chthoniobacteraceae]')

('k__Bacteria', 'p__Verrucomicrobia', 'c__[Pedosphaerae]', 'o__[Pedosphaerales]', 'f__')

4336536

('k__Bacteria', 'p__Acidobacteria', 'c__Acidobacteria-6', 'o__iii1-15', 'f__RB40')

('k__Bacteria', 'p__Acidobacteria', 'c__Acidobacteria-6', 'o__iii1-15', 'f__')

774561

('k__Bacteria', 'p__Actinobacteria', 'c__Actinobacteria', 'o__Actinomycetales', 'f__Micrococcaceae')

('k__Bacteria', 'p__Actinobacteria', 'c__Actinobacteria', 'o__Actinomycetales', 'f__Micromonosporaceae')

4353245

('k__Bacteria', 'p__Proteobacteria', 'c__Alphaproteobacteria', 'o__Rhodobacterales', 'f__Rhodobacteraceae')

('k__Bacteria', 'p__Proteobacteria', 'c__Alphaproteobacteria', 'o__Rhodospirillales', 'f__Rhodospirillaceae')

92144

('k__Bacteria', 'p__Firmicutes', 'c__Clostridia', 'o__Clostridiales', 'f__Lachnospiraceae')

('k__Bacteria', 'p__Firmicutes', 'c__Clostridia', 'o__Clostridiales', 'f__Lachnospiraceae')

3394691

('k__Bacteria', 'p__Proteobacteria', 'c__Alphaproteobacteria', 'o__BD7-3', 'f__')

('k__Bacteria', 'p__Proteobacteria', 'c__Alphaproteobacteria', 'o__', 'f__')

Try increasing and decreasing the number of sequences we’ll align by increasing or decreasing database_subset_size. How does this impact the runtime and fraction of time that we get the correct answer?

kmer content¶

Another metric of sequence composition is kmer composition. A kmer is simply a word (or list of adjacent characters) of length k found within a sequence. Here are the kmer frequencies in a short DNA sequence. The overlap=True parameter here means that our kmers can overlap one another.

import skbio

skbio.DNA('ACCGTGACCAGTTACCAGTTTGACCAA').kmer_frequencies(k=5, overlap=True)

{'ACCGT': 1,

'CCGTG': 1,

'CGTGA': 1,

'GTGAC': 1,

'TGACC': 2,

'GACCA': 2,

'ACCAG': 2,

'CCAGT': 2,

'CAGTT': 2,

'AGTTA': 1,

'GTTAC': 1,

'TTACC': 1,

'TACCA': 1,

'AGTTT': 1,

'GTTTG': 1,

'TTTGA': 1,

'TTGAC': 1,

'ACCAA': 1}

In our next heuristic, we’ll only align our query to the reference sequences with the largest fraction of the kmers that are observed in the query sequence are also present in the reference sequence. This makes a lot of sense to use as an alignment heuristic: we’re only aligning sequences when it looks like they’ll have multiple length-k stretches of nucleotides that are not interrupted by substitutions or insertion/deletion mutations.

In our next heuristic, we’ll only align our query to the reference sequences with the largest fraction of the kmers that are observed in the query sequence. This makes a lot of sense to use as an alignment heuristic: we’re only aligning sequences when it looks like they’ll have multiple length-k stretches of nucleotides that are not interrupted by substitutions or insertion/deletion mutations.

Here’s the source code:

## TODO: Update this to be Jaccard index (or describe it that way, if it already is)

def fraction_shared_kmers(kmer_freqs1, kmer_freqs2):

"""Compute the fraction of kmers in kmer_freqs1 that are also in kmer_freqs2

Parameters

----------

kmer_freqs1, kmer_freqs2

Returns

-------

float

Raises

------

ValueError

If k < 1.

Notes

-----

k-mer counts are not incorporated in this distance metric.

"""

sequence1_kmers = set(kmer_freqs1)

num_sequence1_kmers = len(sequence1_kmers)

sequence2_kmers = set(kmer_freqs2)

shared_kmers = sequence1_kmers & sequence2_kmers

return len(shared_kmers) / num_sequence1_kmers

def heuristic_local_alignment_search_kmers(

queries, reference_db, database_subset_size, k, n=5,

reference_db_kmer_frequencies=None,

aligner=local_pairwise_align_ssw):

results = []

if reference_db_kmer_frequencies is None:

reference_db_kmer_frequencies = \

{r.metadata['id'] : r.kmer_frequencies(k=k, overlap=True) for r in reference_db}

for q in queries:

query_kmer_frequency = q.kmer_frequencies(k=k, overlap=True)

database_subset = []

for r in reference_db:

ref_kmer_frequency = reference_db_kmer_frequencies[r.metadata['id']]

s = fraction_shared_kmers(query_kmer_frequency, ref_kmer_frequency)

database_subset.append((s, r))

database_subset.sort(key=lambda x: x[0], reverse=True)

database_subset = [e[1] for e in database_subset[:database_subset_size]]

results.append(local_alignment_search(

[q], database_subset, n=n, aligner=aligner))

return pd.concat(results)

k = 7

Let’s apply this and see how it does. How does the runtime and fraction of correct assignments compare to our GC content-based search and our full database search?

heuristic_local_alignment_search_kmers_50 = \

functools.partial(heuristic_local_alignment_search_kmers, k=k, database_subset_size=database_subset_size)

runtime, fraction_correct, data = evaluate_search(current_queries, reference_db, reference_taxonomy,

heuristic_local_alignment_search_kmers_50,

taxonomy_levels=taxonomy_levels)

print('%1.2f seconds per query sequence' % runtime)

print('%1.2f%% correct answers' % (fraction_correct * 100.0))

print('Result details:')

for q_id in data.index:

print(q_id)

print(' ', data['Known taxonomy'][q_id])

print(' ', data['Observed taxonomy'][q_id])

print()

5.18 seconds per query sequence

70.00% correct answers

Result details:

4366045

('k__Bacteria', 'p__Firmicutes', 'c__Clostridia', 'o__Clostridiales', 'f__Veillonellaceae')

('k__Bacteria', 'p__Firmicutes', 'c__Clostridia', 'o__Clostridiales', 'f__Veillonellaceae')

1111024

('k__Bacteria', 'p__Gemmatimonadetes', 'c__Gemmatimonadetes', 'o__Gemmatimonadales', 'f__')

('k__Bacteria', 'p__Gemmatimonadetes', 'c__Gemmatimonadetes', 'o__', 'f__')

2729797

('k__Bacteria', 'p__Proteobacteria', 'c__Betaproteobacteria', 'o__Burkholderiales', 'f__Oxalobacteraceae')

('k__Bacteria', 'p__Proteobacteria', 'c__Betaproteobacteria', 'o__Burkholderiales', 'f__Oxalobacteraceae')

864506

('k__Bacteria', 'p__Proteobacteria', 'c__Gammaproteobacteria', 'o__Chromatiales', 'f__')

('k__Bacteria', 'p__Proteobacteria', 'c__Gammaproteobacteria', 'o__[Marinicellales]', 'f__[Marinicellaceae]')

937848

('k__Bacteria', 'p__Verrucomicrobia', 'c__[Spartobacteria]', 'o__[Chthoniobacterales]', 'f__[Chthoniobacteraceae]')

('k__Bacteria', 'p__Verrucomicrobia', 'c__[Spartobacteria]', 'o__[Chthoniobacterales]', 'f__[Chthoniobacteraceae]')

4336536

('k__Bacteria', 'p__Acidobacteria', 'c__Acidobacteria-6', 'o__iii1-15', 'f__RB40')

('k__Bacteria', 'p__Acidobacteria', 'c__Acidobacteria-6', 'o__iii1-15', 'f__RB40')

774561

('k__Bacteria', 'p__Actinobacteria', 'c__Actinobacteria', 'o__Actinomycetales', 'f__Micrococcaceae')

('k__Bacteria', 'p__Actinobacteria', 'c__Actinobacteria', 'o__Actinomycetales', 'f__Micrococcaceae')

4353245

('k__Bacteria', 'p__Proteobacteria', 'c__Alphaproteobacteria', 'o__Rhodobacterales', 'f__Rhodobacteraceae')

('k__Bacteria', 'p__Proteobacteria', 'c__Alphaproteobacteria', 'o__Rhodobacterales', 'f__Rhodobacteraceae')

92144

('k__Bacteria', 'p__Firmicutes', 'c__Clostridia', 'o__Clostridiales', 'f__Lachnospiraceae')

('k__Bacteria', 'p__Firmicutes', 'c__Clostridia', 'o__Clostridiales', 'f__Lachnospiraceae')

3394691

('k__Bacteria', 'p__Proteobacteria', 'c__Alphaproteobacteria', 'o__BD7-3', 'f__')

('k__Bacteria', 'p__Proteobacteria', 'c__Alphaproteobacteria', 'o__Sphingomonadales', 'f__Sphingomonadaceae')

Further optimizing composition-based approaches by pre-computing reference database information¶

One important feature of composition-based approaches is that, because the reference database doesn’t change very often, we can pre-compute features of the reference sequences and re-use them. This can help us to vastly decrease the runtime of our heuristic searches. For example, the computation of all of the reference database kmer frequencies is a lot of work. If we can compute that outside of our database search, we can avoid doing that step for every database search, and therefore remove that computationally expensive (i.e., slow) step of the process.

Here we’ll compute all of the reference database kmer frequencies. Notice that this step takes about a minute to complete. This is a minute of compute time that we can save on every database search!

reference_db_kmer_frequencies = {r.metadata['id']: r.kmer_frequencies(k=k, overlap=True) for r in reference_db}

We’ll now pass our pre-computed kmer frequencies into our search function. How does the runtime and accuracy of this search compare to the searches above? This last database search that we’ve implemented here is very similar to how BLAST works.

heuristic_local_alignment_search_kmers_50 = \

functools.partial(heuristic_local_alignment_search_kmers, reference_db_kmer_frequencies=reference_db_kmer_frequencies,

k=k, database_subset_size=database_subset_size)

runtime, fraction_correct, data = evaluate_search(current_queries, reference_db, reference_taxonomy,

heuristic_local_alignment_search_kmers_50,

taxonomy_levels=taxonomy_levels)

print('%1.2f seconds per query sequence' % runtime)

print('%1.2f%% correct answers' % (fraction_correct * 100.0))

print('Result details:')

for q_id in data.index:

print(q_id)

print(' ', data['Known taxonomy'][q_id])

print(' ', data['Observed taxonomy'][q_id])

print()

0.60 seconds per query sequence

70.00% correct answers

Result details:

4366045

('k__Bacteria', 'p__Firmicutes', 'c__Clostridia', 'o__Clostridiales', 'f__Veillonellaceae')

('k__Bacteria', 'p__Firmicutes', 'c__Clostridia', 'o__Clostridiales', 'f__Veillonellaceae')

1111024

('k__Bacteria', 'p__Gemmatimonadetes', 'c__Gemmatimonadetes', 'o__Gemmatimonadales', 'f__')

('k__Bacteria', 'p__Gemmatimonadetes', 'c__Gemmatimonadetes', 'o__', 'f__')

2729797

('k__Bacteria', 'p__Proteobacteria', 'c__Betaproteobacteria', 'o__Burkholderiales', 'f__Oxalobacteraceae')

('k__Bacteria', 'p__Proteobacteria', 'c__Betaproteobacteria', 'o__Burkholderiales', 'f__Oxalobacteraceae')

864506

('k__Bacteria', 'p__Proteobacteria', 'c__Gammaproteobacteria', 'o__Chromatiales', 'f__')

('k__Bacteria', 'p__Proteobacteria', 'c__Gammaproteobacteria', 'o__[Marinicellales]', 'f__[Marinicellaceae]')

937848

('k__Bacteria', 'p__Verrucomicrobia', 'c__[Spartobacteria]', 'o__[Chthoniobacterales]', 'f__[Chthoniobacteraceae]')

('k__Bacteria', 'p__Verrucomicrobia', 'c__[Spartobacteria]', 'o__[Chthoniobacterales]', 'f__[Chthoniobacteraceae]')

4336536

('k__Bacteria', 'p__Acidobacteria', 'c__Acidobacteria-6', 'o__iii1-15', 'f__RB40')

('k__Bacteria', 'p__Acidobacteria', 'c__Acidobacteria-6', 'o__iii1-15', 'f__RB40')

774561

('k__Bacteria', 'p__Actinobacteria', 'c__Actinobacteria', 'o__Actinomycetales', 'f__Micrococcaceae')

('k__Bacteria', 'p__Actinobacteria', 'c__Actinobacteria', 'o__Actinomycetales', 'f__Micrococcaceae')

4353245

('k__Bacteria', 'p__Proteobacteria', 'c__Alphaproteobacteria', 'o__Rhodobacterales', 'f__Rhodobacteraceae')

('k__Bacteria', 'p__Proteobacteria', 'c__Alphaproteobacteria', 'o__Rhodobacterales', 'f__Rhodobacteraceae')

92144

('k__Bacteria', 'p__Firmicutes', 'c__Clostridia', 'o__Clostridiales', 'f__Lachnospiraceae')

('k__Bacteria', 'p__Firmicutes', 'c__Clostridia', 'o__Clostridiales', 'f__Lachnospiraceae')

3394691

('k__Bacteria', 'p__Proteobacteria', 'c__Alphaproteobacteria', 'o__BD7-3', 'f__')

('k__Bacteria', 'p__Proteobacteria', 'c__Alphaproteobacteria', 'o__Sphingomonadales', 'f__Sphingomonadaceae')

Determining the statistical significance of a pairwise alignment¶

One thing you may have noticed is that the score you get back for a pairwise alignment is hard to interpret. It’s dependent on the query and reference sequence lengths (and possibly their composition, depending on your substitution matrix). So an important question is how to determine how good a given pairwise alignment is. Here we’ll learn about a statistical approach for answering that.

Metrics of alignment quality¶

In the examples above, we compared features such as how long the alignment is (relevant for local but not global alignment), the pairwise similarity between the aligned query and reference, and the score. If you’ve used a system like BLAST, you’ll know that there are other values that are often reported about an alignment, like the number of substitutions, or the number of insertion/deletion (or gap) positions. None of these metrics are useful on their own. Let’s look at an example to see why.

Imagine we’re aligning these two sequences:

GAAGCAGCAC

GAACAGAAC

If we tell our search algorithm that we’re interested in the alignment with the fewest number of substitutions, the following alignment would get us zero substitutions, but there are a lot of bases that look homologous which are not aligned.

GAAGCAGCAC-----

GAA------CAGAAC

On the other hand, if we want to find the alignment with the fewest number of gaps, this one would get us that result, but we now have a lot of substitution events, and some regions that clearly look misaligned (such as the CAG sequence in the middle of both).

GAAGCAGCAC

GAACAGA-AC

The alignment score that has been reported by our pairwise aligners helps us to balance these different features, and we can adjust the scoring scheme to weight things differently (e.g., so that gaps are penalized more or less than certain substitutions). The problem is that the scores are hard to interpret, particularly when we have only one or a few of them.

False positives, false negatives, p-values, and alpha¶

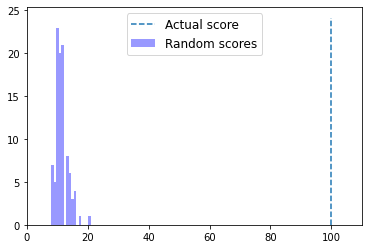

Remember that an alignment of a pair of sequences represents a hypothesis about homology between those sequences. One way that we think about determining if an alignment is good or not is to ask: what fraction of the time would I obtain a score at least this good if my sequences are not homologous? This fraction is usually referred to as our p-value, and this is computed in many different ways. If our p-value is high (e.g., 25%), then our alignment is probably not very good since it means that many non-homologous pairs of sequences would achieve a score at least that high. If our p-value is low (say 0.001%), then our alignment is probably good since scores that high are achieved only infrequently.

Our threshold for defining what we consider to be a high versus low p-value is dependent on how often we’re willing to be wrong. We would set this value, which is usually referred to as \(\alpha\), to some fraction, and if our p-value is less than \(\alpha\), we say that the alignment is statistically significant. If our p-value is greater than \(\alpha\), we say that our alignment is not statistically significant.

There are a couple of ways that we could be wrong when we do sequence homology searching, and we need to consider these when we determine what value we want to define as \(\alpha\). First, we could say a pair of sequences are homologous when they’re not, which would be a false positive or a type 1 error. Or, we could say that a pair of sequences are not homologous when they are, which would be a false negative, or a type 2 error.

If incurring a false positive about 5% of the time is acceptable (i.e., you’re ok with calling a pair of sequences homologous when they actually are not about one in twenty times) then you’d set your \(\alpha\) to 0.05. Setting \(\alpha\) to a value this high likely means that the method will err on the side of false positives, and only infrequently will it say that a pair of sequences are not homologous when they actually are (i.e., achieve a false negative). If \(\alpha\) were set to be very low on the other hand (say, \(1 \times 10^{-50}\)), then you will err on the side of false negatives. Only infrequently will you say that a pair of non-homologous sequences are homologous, but you might call many pairs of homologous sequences non-homologous. You should think of \(\alpha\) as a dial. If you turn the dial toward higher values, you’ll increase your false positive rate and decrease your false negative rate. If you turn the dial toward lower values, you’ll decrease your false positive rate and increase your false negative rate.

There is not a hard-and-fast rule for whether false positives or false negatives are better, which makes choosing \(\alpha\) hard. It’s application specific, so you need to understand the biological question your asking when making this decision, and the ramifications of false positives versus false negatives. In general, when might you prefer to have false positives? When might you prefer to have false negatives?

Interpreting alignment scores in context¶

In this section, we are going to learn about how to interpret alignment scores by empirically determining if a pairwise alignment that we obtain is better than we would expect if the pair of sequences we’re working with were definitely not homologous. For a given pair of sequences that we want to align, we’re first going to align them and compute the score of the alignment. We’re then going to align many pairs of sequences that are similar to the query and reference, but that we know are not homologous. We’ll do this by shuffling or randomizing the order of the bases in the query sequences, and performing another pairwise alignment.

First, we’ll define a function that can generate random sequences for us. This will take a scikit-bio sequence object (either skbio.DNA, skbio.RNA, or skbio.Protein) and a length, and it will randomly generate a sequence of that type and length for us.

import random

def random_sequence(moltype, length):

result = []

alphabet = list(moltype.nondegenerate_chars)

for e in range(length):

result.append(random.choice(alphabet))

return moltype(''.join(result))

We can now run this a few times to generate some random sequences:

random_sequence(skbio.DNA, 50)

DNA

--------------------------------------------------------

Stats:

length: 50

has gaps: False

has degenerates: False

has definites: True

GC-content: 46.00%

--------------------------------------------------------

0 ATCGTTCCTA TTCCCCGAGG CCATAGCTTG TTAAGTAAGT CCATCAGGAT

random_sequence(skbio.DNA, 50)

DNA

--------------------------------------------------------

Stats:

length: 50

has gaps: False

has degenerates: False

has definites: True

GC-content: 60.00%

--------------------------------------------------------

0 GCTAGATGGT GGCGACGCTG GTTCCATCAA ATTGCGCCGC TCGTGCTACC

Next, we need a function that will shuffle the characters in a sequence, and give us a new sequence back. We’ll use this to generate a sequence that is similar (in length and composition) to our input sequence, but which we know is not homologous. We’ll use Pythons random.shuffle function, which randomly re-orders the order of the elements in a sequence, but keeps the composition and length of the sequence the same.

def shuffle_sequence(sequence):

# generate a list of the position indices (numbers) in sequence

randomized_order = list(range(len(sequence)))

# randomly rearrange the order of that list

random.shuffle(randomized_order)